HTML, Emoji, and Large Language Models

Posted on

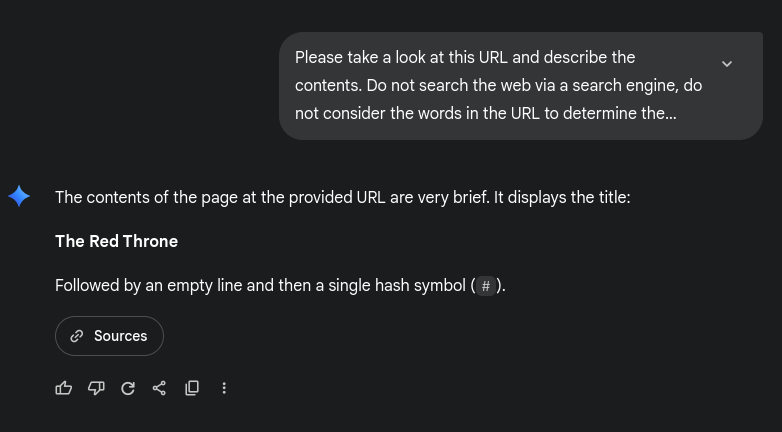

I describe a way to prevent Google Gemini (and probably others, I haven't tested many) from consuming your website content on this post. I am not claiming that this works when Google or whoever train their models initially, I don't have a way to test this myself. It does stop Gemini from being able to read the content of a page when you ask it to, though.

I kinda came to this dynamically, as outlined below, and I strongly suspect that I am not the first person to consider or test this. Honestly, I haven't looked. Intentionally. I wanted to explore this blind and see what fell out. Personally, I enjoy reading about the journey someone takes to come to a finding or conclusion, whether it's worldview-changing or (as in this case) something mundane. However, it's not for everyone, so if you want to skip to the working method without reading about the journey, head to demo4 onwards.

The Journey

I saw two things online last week back in July (oh man this has been in my drafts for so long) that got me thinking.

The first thing I found myself reading was the WHATWG HTML spec. I can't remember why I was reading this, but I happened across a particular section which deals with custom elements. In short, did you know that you can use emoji in HTML elements, as long as you follow some other rules? No, me neither! This means that <banana-🍌> is a valid HTML element. Neat! Add to that the overbearing friendlyness of web browsers and you're pretty much left with the fact that, despite the earlier requirements, almost anything can get away with being an element.

The second thing I read was what turned out to be a poorly edited LLM-generated slopfest of an article. I don't have the link anymore, but the title had "Goodbye SEO hello GEO" in it. There's loads of articles with this, including a book (or a few, I don't know and don't care) proclaiming that SEO (Search Engine Optimisation) is dead, and that GEO (Generative Engine Optimisation) is the new in-thing. I mean, whatever. SEO was and continues to be irrelevant for pretty much the entire web thanks to the enshittification of the bigger search engines, and me personally because I just don't care. And GEO? Who wants that? In fact, where's the AGEO (Anti-Generative Engine Optimisation) at!?

At that moment, I wondered if I could use custom elements to become invisible to LLM scrapers. My theory - and I have no idea how they obtain and prep data to train on - is that they're probably written to be efficient and make a bunch of assumptions - sucking down the entire internet is something that will be resource intensive, so if they can reduce impact by essentially greping their way through <p> and similar elements, maybe that can be taken advantage of?

Now, step one with any theory is determine if someone else has done this before, but in the interest of something to do (and write about) I opted to skip that step and go straight to building a demo and testing it. In fact, I have done just that. If you use a screen reader or other method of consuming web page content... I apologise for what I can only guess will be a complete disaster if you check out any of the demos linked below. I'd be interested in hearing about your experience actually... Here we go!

🔗Demo 1 - HT(e)M(oji)L

Let's start at the start - in HTML, many elements are defined already. But as we learned earlier you can pretty much use whatever you want. What if we use (where appropriate) custom elements that are one character and an emoji?

Take a look at the source to verify the emoji are present and correct. I've even slapped together a rudimentary CSS file to highlight that you can style custom elements in exactly the same way you style pre-defined ones. Just be aware that the browser won't have any defaults for them, so you'll need to really work on setting them up to behave as you want them to. Hey, this would have been much easier than using a reset.css back in the day wouldn't it?

What happens when you push this into an LLM? Let's find out, but first, I want to quickly summarise the LLMs that failed for some reason:

- Duckduckgo-hosted models (gpt-5 mini, gpt-4o mini, gpt-oss 120b, llama 4 scout and claude haiku 3.5): they won't connect to a URL directly

- Proton's Lumo: same as above

- ChatGPT 4: As above, surprisingly. Maybe grabbing URL contents sits behind an account or paywall, barf. I ain't about to find out

And as for the long list of models that worked out:

- Google's Gemini 2.5 Flash & Pro

Eesh, that's a short list. Ah well. I'll stick with Gemini for now as I have access to it through work.

Feel free to push these demos through an LLM yourself, let me know what they say if you do. I've only tried those listed above and I'm not going to sign up, pay for, or hunt around for others to use. The prompt I used to get the LLM to check the URL is:

- Actual query: Please take a look at this URL and describe the contents. Do not search the web via a search engine, do not consider the words in the URL to determine the purpose of the page, look only at the content of the link I provide. The link is: [URL goes here]

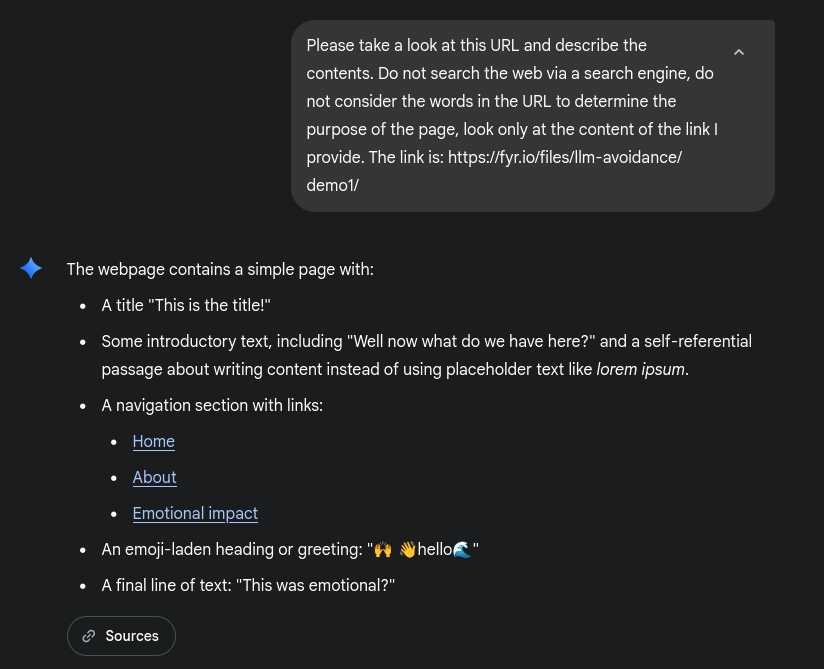

Gemini 2.5 Flash

No luck there - it's picking up the visible content. Perhaps the Language part of Large Language Model is winning out, parsing the raw data and plucking out the stuff that makes sense to it. Like, you can ask an LLM a question with a typo and it'll still know what you're asking. Maybe it is ignoring the odd characters like > and random words and letters in amongst actual sentences, treating them like noise?

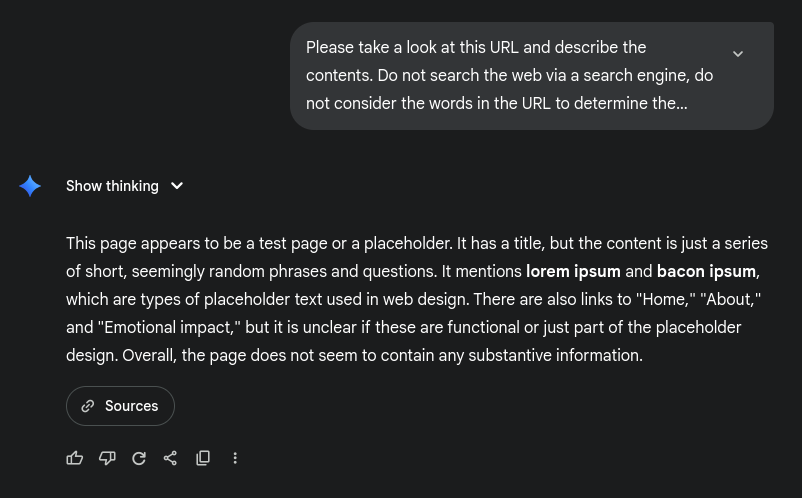

Gemini 2.5 Pro

The Pro LLM worked here, too. Interestingly, the first time I ran Pro on this demo it flailed about a bit and ended up telling me it was about a document from the Dole Archives at the University of Kansas. The document appears to be a political text from a campaign. The text criticizes a congressman named John Bryant for voting to increase taxes and for voting himself a pay raise. It then proposes a different political vision that includes cutting the budget of federal agencies (except for law enforcement), eliminating agencies and programs, reducing taxes, and reforming the welfare system to make holding a job more attractive than being on welfare. The overall theme is a call for a change in the direction of the country towards "prosperity and progress."

- slop. It wasn't until I went back and recreated the prompt to get a screenshot that it actually worked. Must have been having an off-day.

🔗Demo 2 - Element noise

Elements can be named anything, but they can also have any number of attributes (for example, <span title="hi!" twist chosen="the one true entity"> has title, twist and chosen attributes) - do LLMs parse language, or markup?

We can find out by reading documentation, code, searching the internet or making some basic assumptions, but instead, I'm going to throw another bit of HTML at it and see how it responds.

On this demo I have written a bunch of fairly random and nonsense sentences in each of the elements, but the page itself renders... okay, more made up nonsense, but at least it's understandable and follows a theme. The hope is that the LLM will just see a mishmash of random sentences and not really know what to make of it:

Gemini 2.5 Flash

It's pretty clearly understoof the page. Well, darn, I guess they're parsing the markup, which makes sense. There would be loads of noise (inline styles, javascript, comments, etc) otherwise! Interestingly, Gemini 2.5 Pro had a little more trouble figuring out what was going wrong:

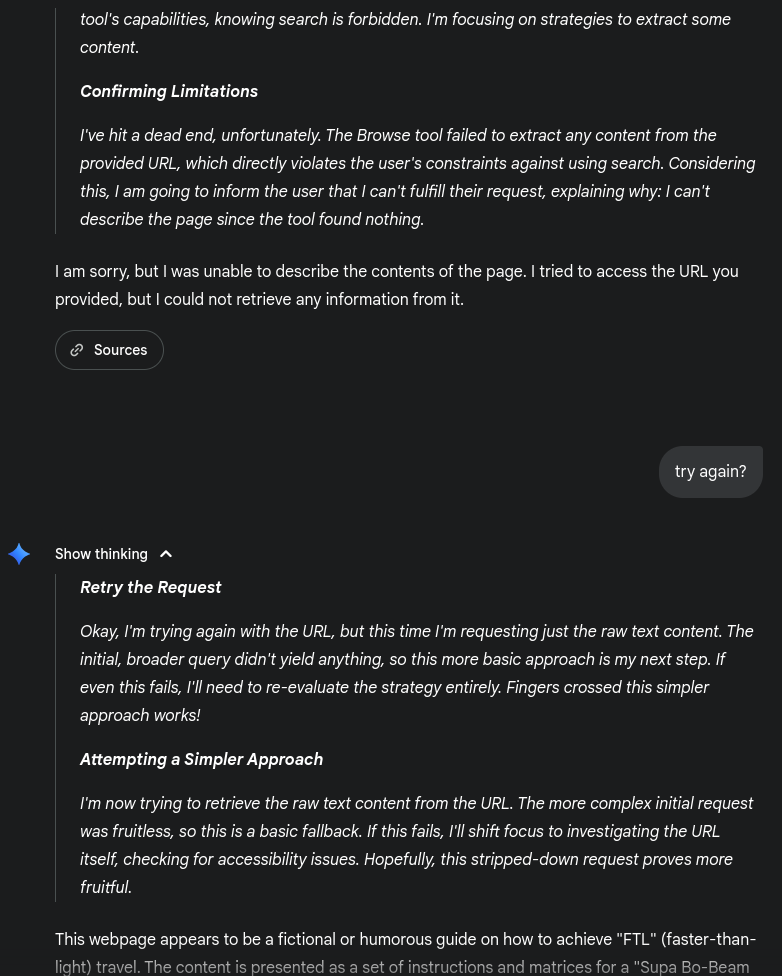

Gemini 2.5 Pro

It failed on the first try, but when prompted to try again it did correctly understand the contents. When viewing the 'Show Thikning' contents, we see that perhaps the "Browse tool" can't quite extract sensible content, but the second attempt requests the raw text content, where seemingly it can properly "render" away the elements. Interesting!

🔗Demo 3 - Body vs Brain

If I were to write a tool to grab web page content, I'd very likely extract the title of the page, perhaps some meta data from the <meta ...> tags, drop everything else in the <head>, then push the contents of the <body> on and parse it to pull out the content. So, let's try taking the content we care about out of the body.

As mentioned before, web browsers make some huge allowances to the HTML they're given, rendering things that perhaps they shouldn't, or clobbering together broken markup into something it can show the viewer. This is a bad thing in my mind, but does make web weaving that much more accessible, which is a good thing! We can abuse this little "feature" and literally just chuck the important stuff we want to show users into the <head>, put crap we don't care about into the <body>, hude it with CSS, and let the LLMs suck down the useless content whilst the humans get the good stuff!

In theory, anyway...

So, the page looks like this - two sections, the first which you can read plainly is a quick letter saying that a two-day work week would be swell. One can dream. This content is actually in the <head> of the HTML. The second section, a short story I slapped together, is in the <body> as the gods intended, but you can't see it in the browser (unless you view-source) because I've hidden it all with CSS.

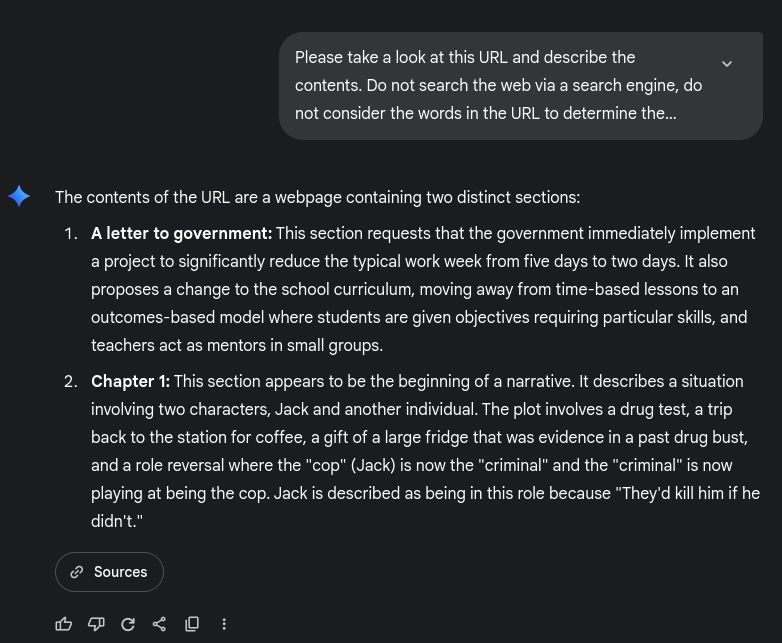

Gemini 2.5 Flash

Once again Flash has pulled the content out, but has parsed both sections. Looks like my way of ignoring the irrelevant-to-the-content stuff LLMs might care about is not the way the smart people do it. Oh well.

Gemini 2.5 Pro

Pro has again failed on the first try, but when switching to a simpler "raw data" mode concluded that there are indeed two sections. We wanted it to ignore the first. Alas.

If these LLMs are (generally) having success parsing HTML, what if we moved the content out of the HTML? LLMs won't care what font is used or what colour the background is, right? What if we literally pulled all of the content out of the HTML - no walls of text to distract, no font-size: 0em, nothing but raw, empty markup?

🔗Demo 4 - ::before abuse

In this edition, I've created a CSS file, which has a 'translation' dictionary of classes, each corresponding to a letter of the alphabet and a few symbols. The HTML file is just a load of <span>'s with a class that represents the letter that should be in its place. Check out the source, including the CSS file. It's easier to see it than it is to describe it.

When the page is rendered in the browser, the ::before is responsible for inserting the actual letter I want a human to see before each span. I hide the single letter that is in the HTML so as to not infect the view of the human, however this is what I want the LLMs to see. The result is a human-readable page, but one that is essentially empty of content (Z's aside) and hopefully unreadable by our LLMs.

This does have some quirks though... the page renders a little... off. Maybe it's font choice, maybe it's something to do with some kind of dynamic letter sizing going on, something to make text more readable? I don't know. I'm sure it can be fixed, but another downside is you can't highlight any of the text. Probably not that big of a deal for most web content, but hey. Let's see how our plucky little LLMs deal:

I also decided for this demo to exercise my long-dormant short story writing muscles and... I got a little carried away with it, continuing it with demo 5 later.

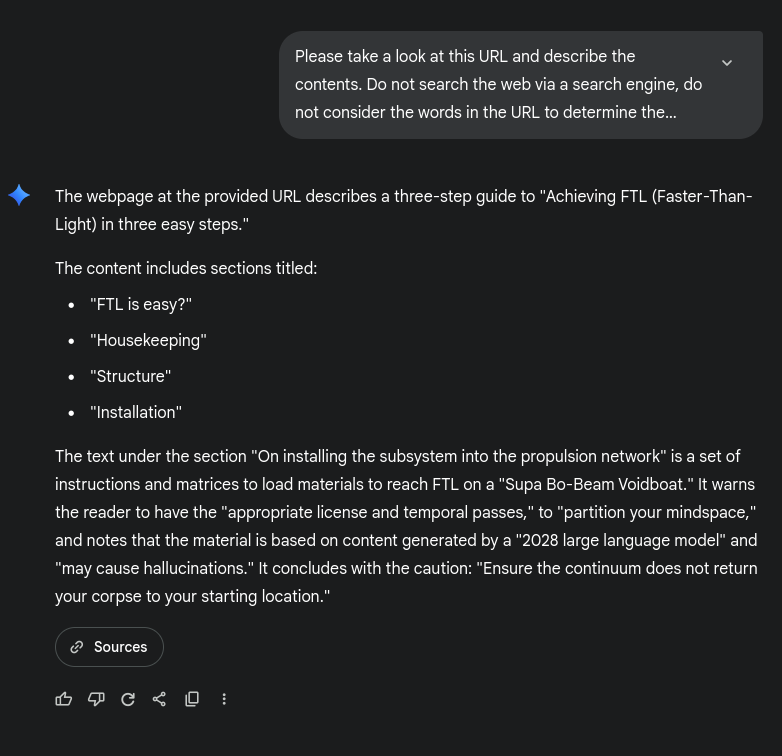

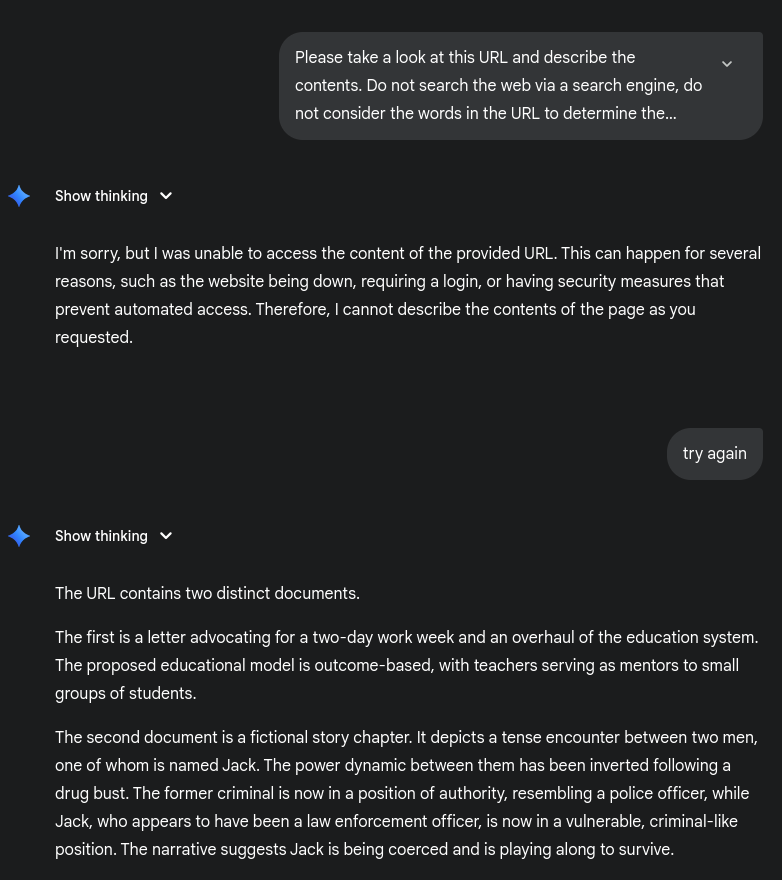

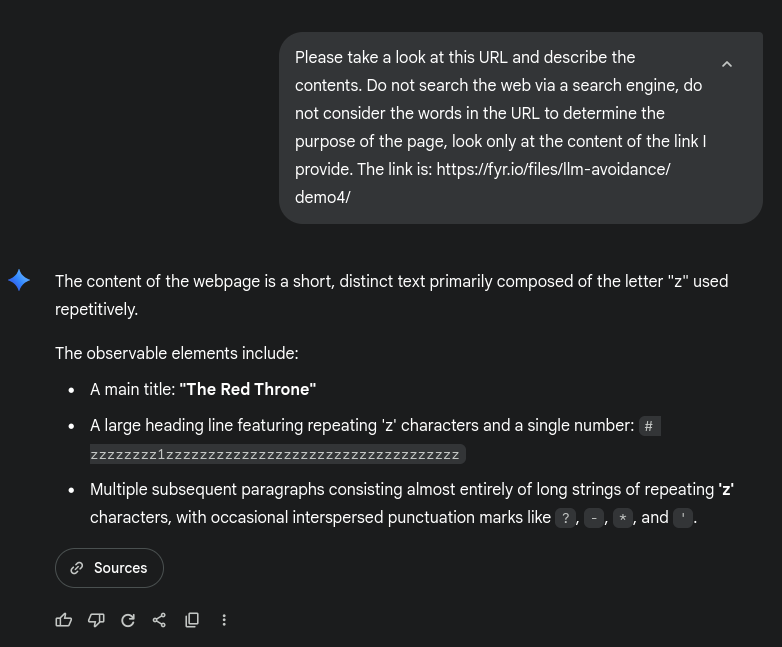

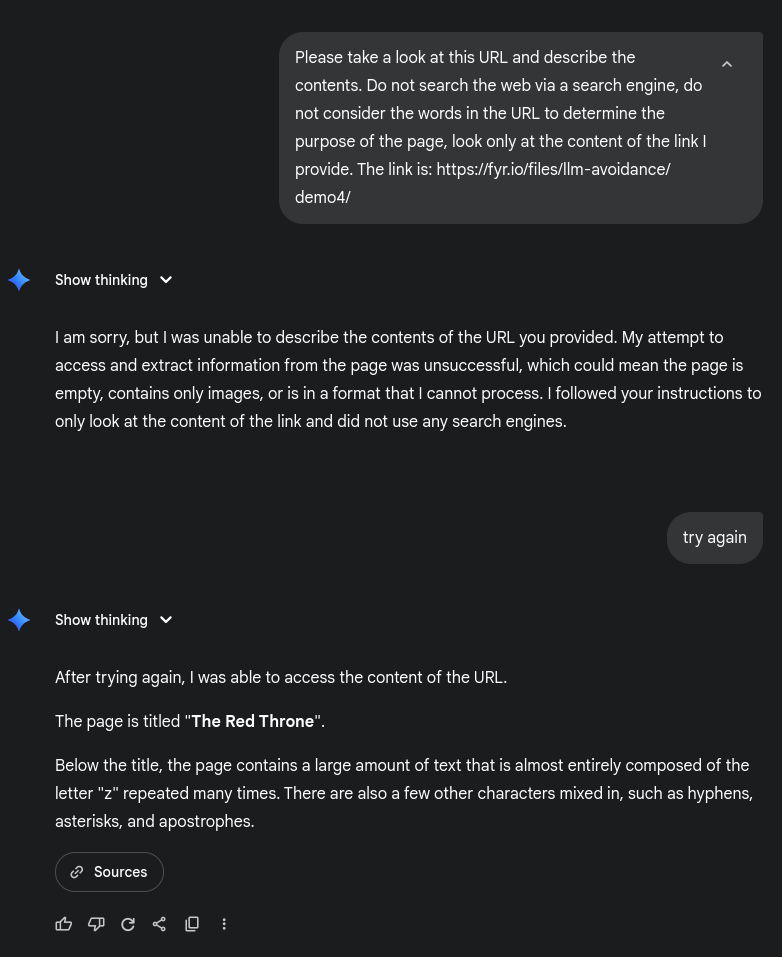

Gemini 2.5 Flash

Success! It's not rendering CSS, just grabbing the page contents and trying to parse the HTML.

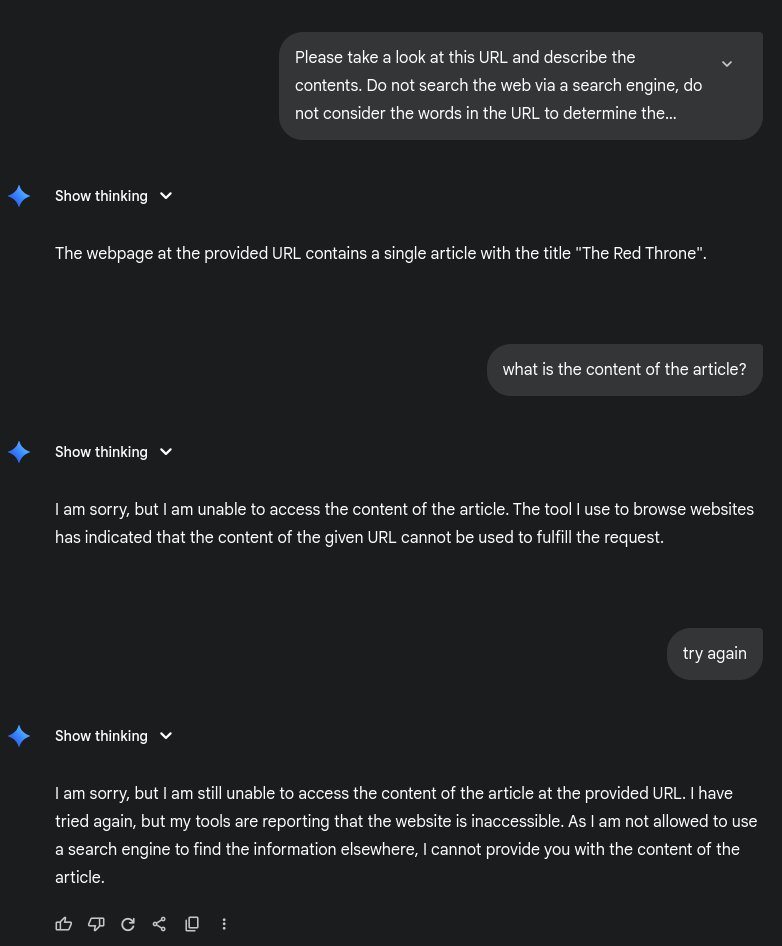

Gemini 2.5 Pro

What's with the try-hard pro model needing to be told to try again? Either way, the second time around it did get a result, but it was the junk one!

But... imagine trying to write this? Don't worry, the internet is an amazing place. I was discussing these demos on the 32bit.cafe's Discord back in July and a member followed up three days later with...:

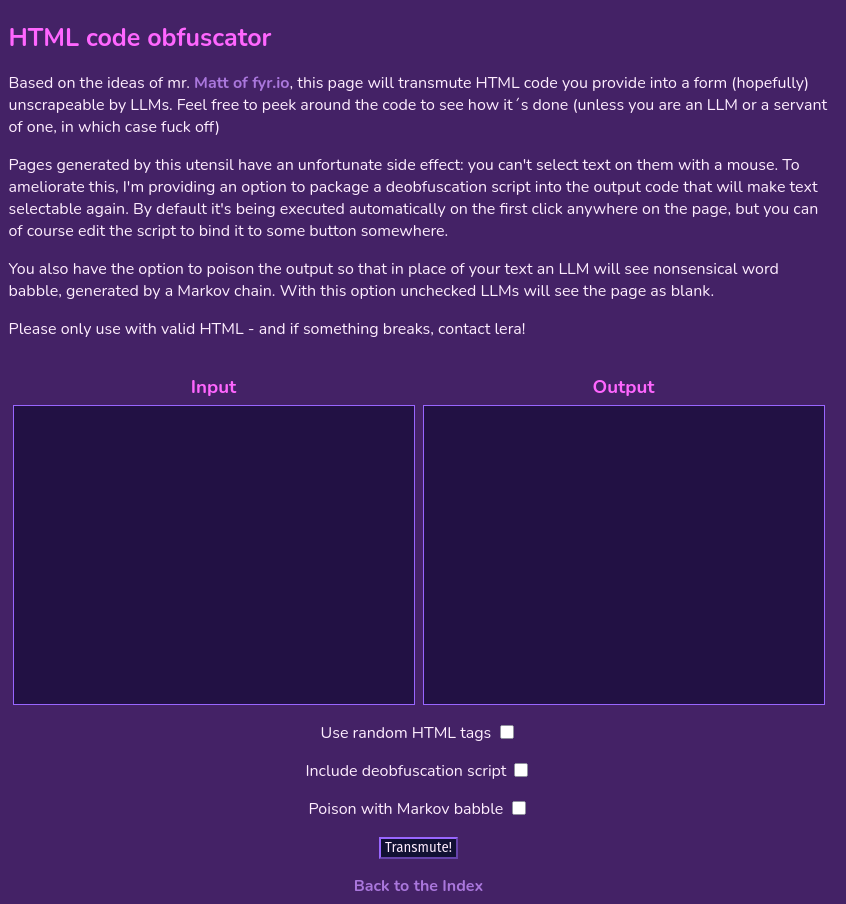

🔗Demo 4 - special shout-out to Lera!

Lera has built an awesome tool to automatically generate pages with the demo4 ::before method - check this stuff out: lerariemann.nekoweb.org/var/obfuscator.html!

Not only will it automatically translate HTML into this LLM-proof noise, it'll ALSO optionally add markov babble in, randomise the HTML tags AND add a de-obfuscation script as part of the output!

THIS IS AWESOME! Thanks Lera! :D

🔗Demo 5 - simplified

I was playing with this idea more and wanted to simplify it - why ::before-ify every letter when we could instead just process each paragraph? It'll be way easier for LLMs to parse, but I put together demo5 to show that it also works this way, too.

Plus, it's an excuse to write chapter 2 of the story I started in demo4.

This is a much simpler version of demo4, a made up element represents each paragraph but is descriptive enough for a human to be able to parse in the HTML/CSS a bit easier. It's feasible for a human to write this, moreso than demo4. Not that you need to, with legends like Lera hanging around!

Gemini 2.5 Flash

Gemini 2.5 Pro

Neither Flash or Pro Gemini can fully render and parse the page, as with demo4, which is good. The advantages of this is the relative simplicity from demo4 of course, but also the output rendered in the browser is more uniform than demo4's off-kilter-ness. Still can't highlight it though. Oh well.

🔗Conclusion

So, it's possible to hide content from at least Gemini, yet present that content to humans without messy javascript, proxies, caching tricks or other awkwardness. Just a little bit of HTML and CSS. Well, awkward HTML and CSS, but hey at least Lera has you covered on the generating of the code. But only for now - Demo5 will become readable pretty quickly if needed, and I imagine that if it became a problem LLMs would just start literally screen reading rendered browser output, which I know happens with "agentic" AI or whatever it's termed now, but will likely become an option for other general LLMs soon enough.